How NYC hospitals are using artificial intelligence to save lives

June 27, 2023, 6 a.m.

High-tech models help identify at-risk patients and even recommend surgeries.

Artificial intelligence is changing the way we learn and work. But New Yorkers may not realize that similar algorithms are already aiding lifesaving decisions at hospitals every day.

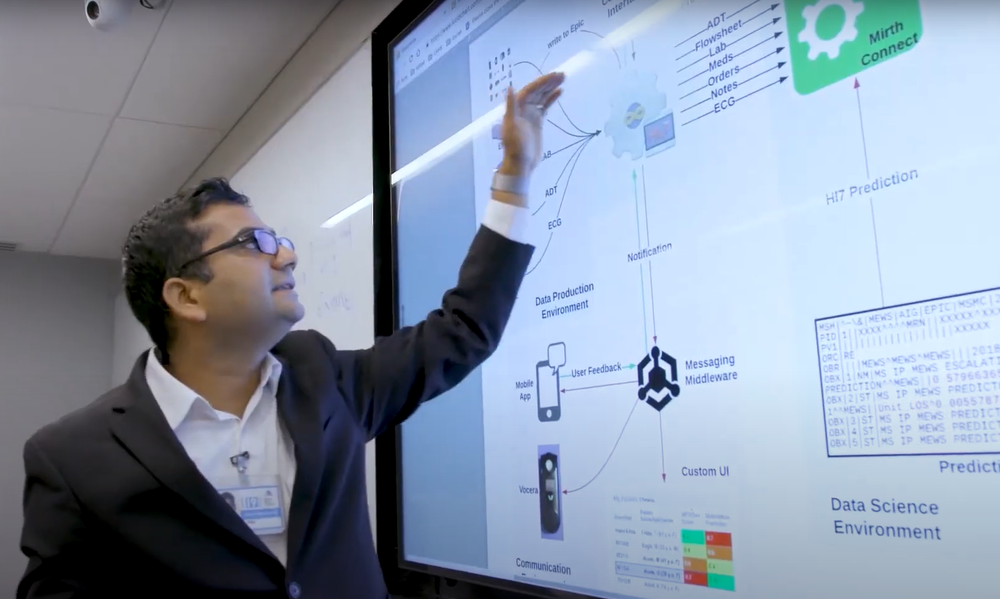

Staff at three New York City hospital systems say that in mere seconds, artificial intelligence tools can gauge a patient’s odds of malnutrition, delirium, ICU admission and even death, well before a doctor enters the hospital room.

Some AI programs take on administrative tasks, such as helping patients book appointments or request refills without the hassle of playing phone tag. Other systems can give tailored pregnancy advice or even identify signs of diseases like breast cancer.

They can’t perform physical exams, at least not yet. But hospital staff say these AI systems are already helping prioritize specialists’ time and guide medical decision-making. Clinicians discussed these applications at a summit held in late May by the New York Academy of Sciences.

“We’re able to identify patients who otherwise wouldn’t be spotted,” Sara Wilson, senior director of clinical nutrition services at Mount Sinai Health System, said of one tool that flags patients at risk of malnutrition. “Your brain just can’t move as fast as this machine can and touch all the metrics that a machine can.”

This malnutrition tool is what’s known as a predictive model, and it doesn’t work all that differently from an experienced clinician. This type of AI scans through patients’ charts, paying special attention to factors that may predispose each individual to undernourishment, like weight and bloodwork. If a patient’s risk factors pass a certain threshold, the patient gets flagged for a follow-up visit with a registered dietitian.
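To make the "risk factors pass a threshold, patient gets flagged" idea concrete, here is a minimal sketch in Python. It is not Mount Sinai’s model, whose inputs and weights the hospital did not describe. Real predictive models are usually trained statistically rather than hand-tuned, so this example swaps in a simple points-based score. The chart fields (`bmi`, `weight_loss_pct`, `albumin_g_dl`), the cutoffs, the weights and the `THRESHOLD` value are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


# Hypothetical chart snapshot; the fields are illustrative, not the real model's inputs.
@dataclass
class ChartSnapshot:
    patient_id: str
    bmi: float              # body-mass index, kg/m^2
    weight_loss_pct: float  # percent of body weight lost recently
    albumin_g_dl: float     # serum albumin from bloodwork, g/dL


# Illustrative weights and cutoff, assumed for this sketch only.
WEIGHTS = {"low_bmi": 2.0, "weight_loss": 3.0, "low_albumin": 1.5}
THRESHOLD = 3.0


def risk_score(chart: ChartSnapshot) -> float:
    """Add up the weight of every risk factor present in the chart."""
    score = 0.0
    if chart.bmi < 18.5:
        score += WEIGHTS["low_bmi"]
    if chart.weight_loss_pct >= 5.0:
        score += WEIGHTS["weight_loss"]
    if chart.albumin_g_dl < 3.5:
        score += WEIGHTS["low_albumin"]
    return score


def flag_for_dietitian(charts: list[ChartSnapshot]) -> list[str]:
    """Return IDs of patients whose score meets or exceeds the threshold."""
    return [c.patient_id for c in charts if risk_score(c) >= THRESHOLD]


if __name__ == "__main__":
    charts = [
        ChartSnapshot("A", bmi=17.9, weight_loss_pct=6.0, albumin_g_dl=3.2),
        ChartSnapshot("B", bmi=24.0, weight_loss_pct=1.0, albumin_g_dl=4.1),
        ChartSnapshot("C", bmi=17.0, weight_loss_pct=0.0, albumin_g_dl=3.0),
    ]
    print(flag_for_dietitian(charts))  # ['A', 'C']
```

Patient A trips all three factors (score 6.5). Patient C trips two (3.5). Patient B trips none. So only A and C would be referred to a dietitian. The speed advantage hospital staff describe comes from running a check like this across every chart at once.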

The key advantage of AI tools, hospital staff explained, is speed. They can sift through records in a fraction of the time it would take a human to do so. That frees up doctors and nurses to spend more time actually interacting with patients, Wilson said.

That speed also means that doctors can more quickly identify and start treating the patients who need it most. For conditions like delirium — a state of confusion that can signal health problems in older patients — that efficiency is essential, explained Mount Sinai’s Dr. Joseph Friedman. Before they started using their AI model, he said, “we weren’t able to engage in the kind of aggressive and decisive treatment of patients to bring them out of this delirium because of all the time we were spending assessing them.”

Now, they’re able to target more at-risk patients with fewer screenings and get them on the road to recovery that much faster. Before the tool was up and running, he said, clinicians would have to screen up to 100 people per day to identify even two delirious patients. Now, with the AI program’s help, they can identify as many as 10 delirious patients for every 25 screened per day.
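The size of that improvement is easy to miss in prose. The short Python calculation below uses only Friedman’s figures: up to 100 screened to find two cases before, and as many as 10 found per 25 screened now. Because he gave an upper and a best-case bound, the result is a best-case comparison, not a measured average. The helper names are made up for this example.

```python
def hit_rate(found: int, screened: int) -> float:
    """Share of screened patients who turn out to be delirious."""
    return found / screened


def screens_per_case(screened: int, found: int) -> float:
    """How many screenings it takes, on average, to find one delirious patient."""
    return screened / found


before_rate = hit_rate(2, 100)         # 0.02, i.e. 2%
after_rate = hit_rate(10, 25)          # 0.40, i.e. 40%
before_cost = screens_per_case(100, 2)  # 50 screenings per case found
after_cost = screens_per_case(25, 10)   # 2.5 screenings per case found

print(f"hit rate: {before_rate:.0%} -> {after_rate:.0%}")
print(f"screens per case: {before_cost:g} -> {after_cost:g}")
```

By these figures, each screening is about 20 times as likely to find a delirious patient. Clinicians go from roughly 50 screenings per case to 2.5.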

Other AI tools can even identify patients in need of lifesaving, time-sensitive procedures. New York City Health + Hospitals uses an algorithm to canvass brain scans of stroke patients. The system quickly flags patients who are good candidates for surgery to remove a blood clot.

“Those are the people you’d want to identify quickly,” said Dr. Michael Bouton, chief medical information officer for the public hospital system.

At NYU Langone, a care planning tool estimates a patient’s chances of dying within the next two months.

Some hospitals are also experimenting with chatbots that patients can use both for everyday tasks like scheduling and for medical guidance. Mount Sinai has a chatbot that guides anxious patients who are trying to decide between making a regular doctor’s appointment, visiting a local urgent care or heading to an emergency room, said Robbie Freeman, chief nursing informatics officer for the health system.

Separately, Northwell Health launched an AI-enhanced pregnancy chat app earlier this year to screen for common symptoms and give users personalized advice. The app can also connect users to a human health care worker or even prompt them to call 911 if their responses warrant it, for example, if they describe symptoms of preeclampsia or thoughts of self-harm.

Hospital staff interviewed by Gothamist say they’re excited about the potential of large language models like ChatGPT, which generate text in response to carefully crafted prompts. These systems could help health care providers with writing tasks, including communicating with patients.

But AI isn’t immune to errors. One tool, designed to predict the deadly infection response known as sepsis, had to be extensively redesigned after researchers and journalists flagged flaws in its detection system. Depending on how they are trained, AI programs can also replicate biases inherent to their human makers. NYC Health + Hospitals’ Bouton explained that the hospital system decided not to use a tool for predicting which patients were most likely to miss their scheduled appointments, citing bias concerns.

Health + Hospitals and the other health providers interviewed for this story all said that their AI tools must be approved by an ethics committee and thoroughly tested before they’re deployed.

That’s especially important for tools that predict patients’ decline or death. A care planning tool that estimates patients’ odds of dying within the next two months required especially rigorous discussion, Nader Mherabi, chief information officer at NYU Langone, said in an email. The tool prompts doctors to talk to high-risk patients about their end-of-life wishes and treatment preferences, which can then be documented in a living will or other instructions.

Representatives for New York City Health + Hospitals, Mount Sinai Health System and NYU Langone Health all stressed that the AI tools are simply informing medical professionals’ decision-making, not supplanting it.

“Humans are always in the loop,” Mherabi said. The models are just one factor in “a sea of data points” that each health care provider considers, he added.

For all the hype and doomsday labor predictions, doctors and other clinicians aren’t worried about AI coming for their careers anytime soon — particularly when the job requires physical interaction.

“The AI serves us. We don’t serve it,” said Friedman. “It’s not like a Star Trek holographic doctor who’s making decisions. We’re simply not there.”
